Key Takeaways

- New Android features include AI summaries of text messages in Android Auto and AI-generated captions for images.

- Access Google’s AI model, Gemini, in the Messages app to draft messages, brainstorm ideas, and plan events; it's rolling out in beta.

- Google Maps and Lens team up so TalkBack can read business information aloud, while Docs lets you leave handwritten feedback on Android.

Google kicked off its MWC 2024 presence by announcing several new features for Android phones, tablets, and Wear OS devices. Android Auto is even getting in on the AI fun with a new feature that summarizes long text messages or noisy group chats (we all belong to at least one). As is the current trend for tech companies and their announcements, there’s plenty of AI to go around with Google’s latest Android features, whether you like it or not.

Google says the new features will start rolling out right away, but as any Android user knows, a rollout that has started can still take a while to reach your device. So be patient. In the meantime, here’s a quick rundown of all the features coming to your Android device soon(ish).

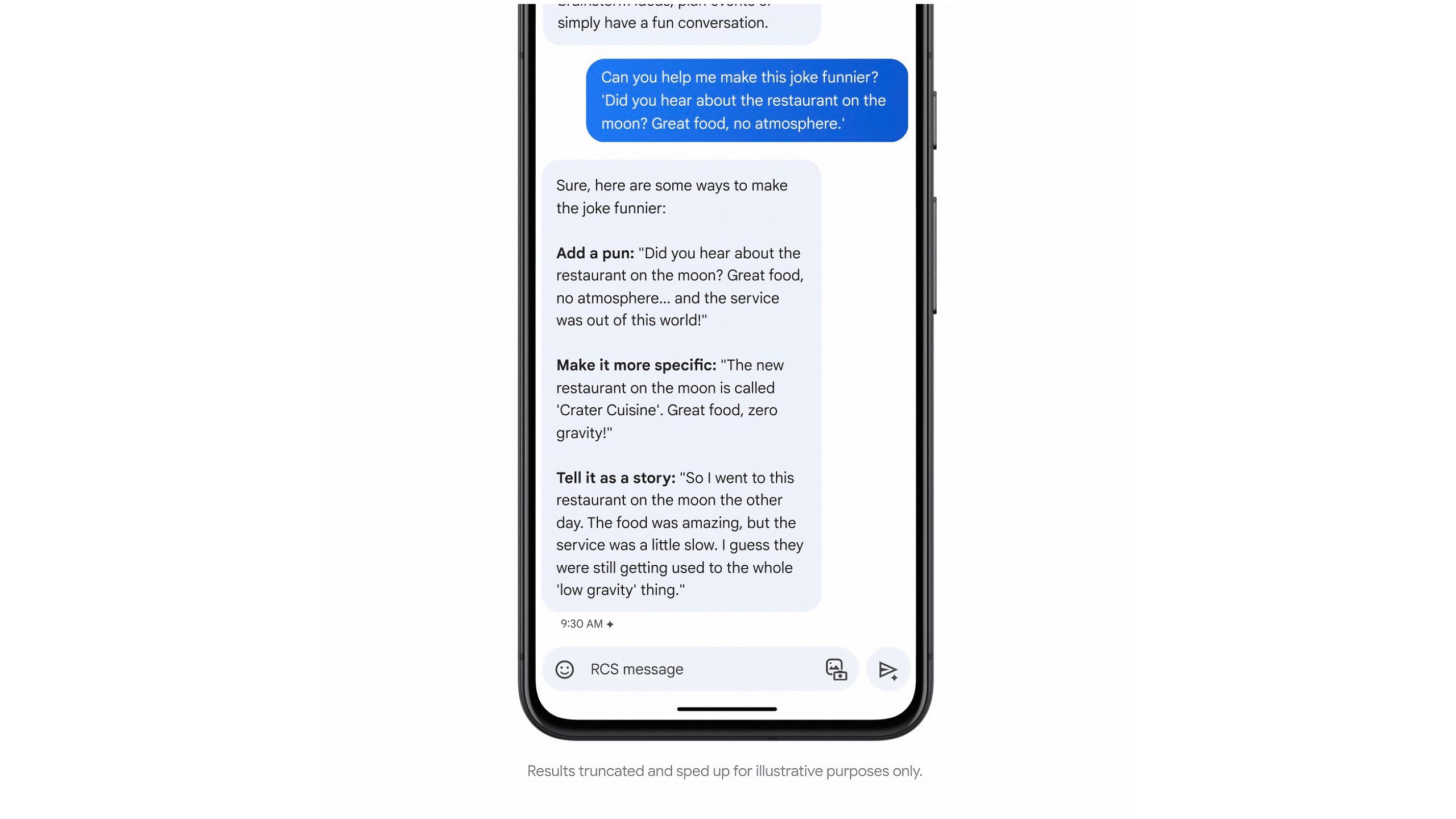

Chat with Gemini in Messages

For those times when no one’s replying to your texts

Gemini, Google’s generative AI, is coming to Google’s Messages app, where you can talk to the AI just like you would one of your friends. Google says you can use the Chat with Gemini feature to draft a new message, brainstorm ideas, plan an event (like a trip with friends, or a date night), or have a conversation with the AI. Gemini in the Messages app will launch in beta in English. Using Chat with Gemini sounds way easier than going to a separate website or launching a separate app to talk to Google’s AI.

Android’s getting more accessible

Hear captions for images, generated by AI

Another AI feature coming to Android could be a game-changer for people with visual impairments. Once it's available on your Android device, you can listen to AI-generated captions for photos, online images, and pictures included in messages. The feature is rolling out globally, in English to start, but we fully expect Google to release it in other languages at some point.

Google Maps will read business information out loud

Google Maps and Lens already work well together, and they’re about to get a little more useful: Google is adding the ability to point your phone’s camera at a place in your field of vision and have TalkBack read that place’s information aloud. The feature will come in handy when you want a quick way to hear business hours, ratings, or directions, whether you have low vision or not.

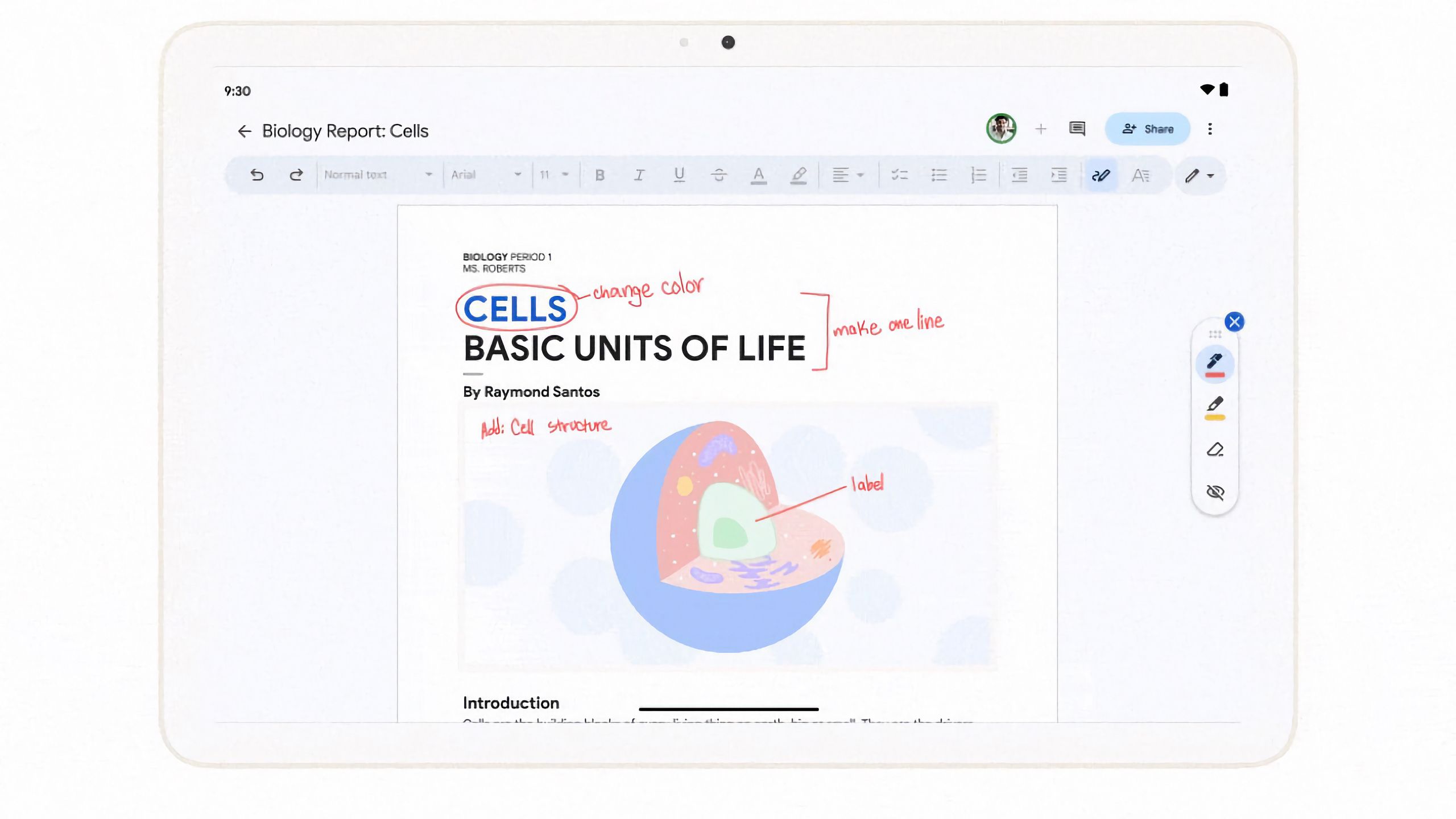

Handwritten feedback in Google Docs

Google Docs has long been better suited to larger devices with full keyboards. That’s about to change, as Google is rolling out a feature that lets you suggest changes and provide feedback using handwritten notes, directly from your Android phone or tablet. According to Google, you can use your finger or a stylus, so markup in Docs isn’t limited to stylus-equipped phones like the Samsung Galaxy S24 Ultra. You can choose from different pen colors and highlighters to personalize your feedback on a document.

Google/Pocket-lint

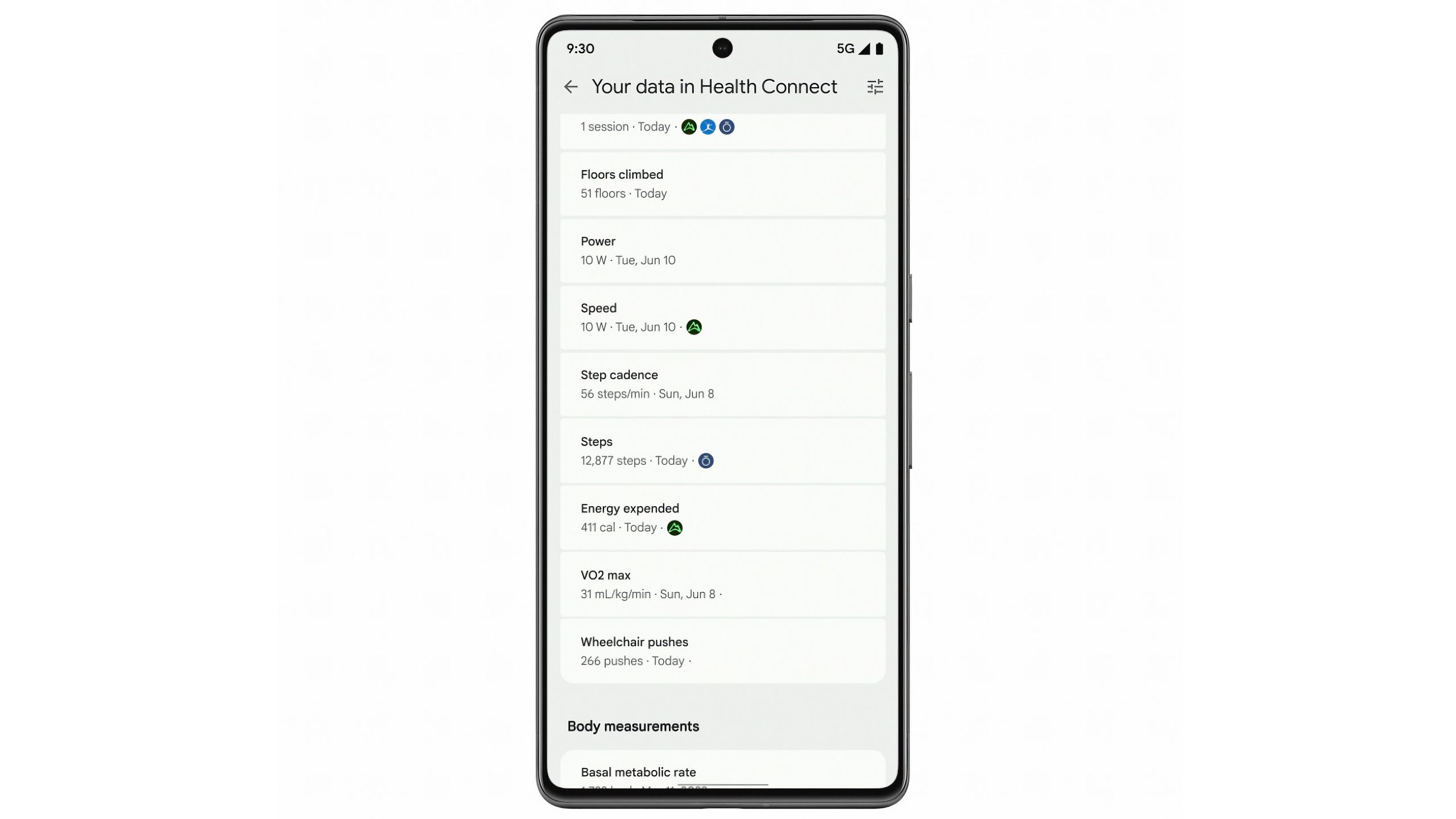

Health and wellness improvements

Google is improving the redesigned Fitbit app through Health Connect, which brings in data from your other wearables and apps like AllTrails, Oura Ring, and MyFitnessPal. With these improvements, you can visit the You tab in the Fitbit app on your Android phone to see data from connected apps alongside your Fitbit data, making it a more cohesive health app. It’s similar to how Apple Health works on the iPhone, and a welcome addition to Fitbit and Google’s health-tracking features.

Credit : Source Post